Caching means storing frequently used data in temporary high-speed storage to reduce a system's latency. Redis applies the same idea: by keeping frequently accessed data in memory, a Redis cache sharply reduces response times and database load, resulting in faster and more scalable applications.

Caching in Redis

Redis, often referred to as a “data structure server,” is known for its exceptional performance and versatility. While Redis offers a wide range of features, one of its primary use cases is data caching.

- Redis caching leverages its in-memory storage capabilities, allowing applications to store and retrieve data with extremely low latency.
- It provides key-value storage, allowing developers to store and retrieve data using unique keys.
- With Redis, developers can set expiration times for cached data, which limits how long a stale entry can stay in the cache.
- Additionally, Redis offers advanced features like pub/sub messaging, which can be leveraged to implement cache invalidation mechanisms.
- By using Redis for caching, applications can significantly enhance their performance, reduce response times, and improve overall scalability.
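As a rough illustration of the key-value and expiration points above, here is a minimal sketch that assumes the redis-py client. The `product:<id>` key format, the 300-second default, and the helper names `cache_product` and `invalidate_product` are hypothetical choices made for this example only.

```python
def cache_product(client, product_id, payload, ttl_seconds=300):
    """Store a value under a unique key together with an expiration time."""
    key = f"product:{product_id}"  # hypothetical key naming scheme
    # SET with the EX option writes the value and its TTL in one command.
    client.set(key, payload, ex=ttl_seconds)
    return key


def invalidate_product(client, product_id):
    """Delete the cached entry so the next read has to repopulate it."""
    # DEL returns the number of keys that were actually removed.
    return client.delete(f"product:{product_id}")


def demo():
    """Run both helpers against a local server (assumed at localhost:6379)."""
    import redis  # redis-py, installed with `pip install redis`

    client = redis.Redis(host="localhost", port=6379, decode_responses=True)
    key = cache_product(client, 42, '{"name": "widget"}')
    print(client.get(key), client.ttl(key))
    invalidate_product(client, 42)
```

The helpers take the client as an argument, so the same code works with any object that exposes `set` and `delete` in the redis-py style.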

Best Practices for Redis Caching

It is important to consider a few best practices when working with Redis caching:

- Identify the Right Data to Cache: Not all data needs to be cached. Focus on caching data that is frequently accessed or computationally expensive to generate. This includes data that doesn’t change frequently or can be shared across multiple requests.
- Set Expiration Policies: Determine an appropriate expiration policy for cached data. This keeps the cache reasonably fresh and limits how long stale data can be served. Set expiration times based on the frequency of data updates and the desired freshness of the cached data.
- Implement Cache Invalidation: When the underlying data changes, it is essential to invalidate or update the corresponding cache entries. This can be done by using techniques such as cache invalidation triggers or monitoring changes in the data source.
- Monitor Cache Performance: Regularly monitor the performance of the cache to ensure its effectiveness. Keep an eye on cache hit rates, cache misses, and overall cache utilization. Monitoring can help identify potential bottlenecks or areas for optimization.
- Scale Redis for High Traffic: As your application’s traffic grows, consider scaling Redis to handle the increased load. This can involve using Redis Cluster to distribute the data across multiple instances, which increases read and write throughput, or using replication to add replicas that serve additional reads.
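For the monitoring point, one possible starting place is the `keyspace_hits` and `keyspace_misses` counters that Redis reports in the stats section of `INFO`. The sketch below assumes the redis-py client and a server at localhost:6379; the function names are hypothetical.

```python
def cache_hit_ratio(stats):
    """Return hits / (hits + misses) from an INFO stats mapping.

    Returns None when the server has not recorded any key lookups yet.
    """
    hits = int(stats.get("keyspace_hits", 0))
    misses = int(stats.get("keyspace_misses", 0))
    total = hits + misses
    return hits / total if total else None


def current_hit_ratio(host="localhost", port=6379):
    """Read the counters from a running server (host and port are assumptions)."""
    import redis  # redis-py, installed with `pip install redis`

    client = redis.Redis(host=host, port=port)
    # info("stats") returns the stats section of INFO as a dict.
    return cache_hit_ratio(client.info("stats"))
```

These counters cover the whole server since its last restart or stats reset, so a per-application hit rate would need its own counters.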

By following these best practices, you can maximize the benefits of Redis caching and create high-performance applications. Remember that caching is a powerful tool, but it should be used judiciously and in combination with other performance optimization techniques.

Implementation of Caching in Redis

In this section, we will explore the step-by-step implementation of Redis caching in an application. We will cover the following subtopics with code snippets and examples:

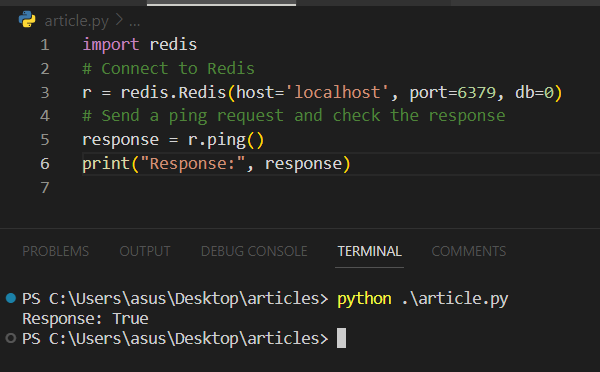

Step 1: Establishing a Connection with Redis

To begin, we need to establish a connection with the Redis server. Install the Python client with pip, then connect to Redis and check that the connection is successful by sending a ping request and receiving the response “True.”

```
pip install redis
```

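The connection code itself is not reproduced here, so the following is a minimal sketch of what such a check could look like. It assumes the redis-py client and a server listening on localhost:6379; the helper names `connect` and `check_connection` are hypothetical.

```python
def connect(host="localhost", port=6379, db=0):
    """Create a Redis client; redis-py opens the socket on the first command."""
    import redis  # redis-py, installed with `pip install redis`

    return redis.Redis(host=host, port=port, db=db, decode_responses=True)


def check_connection(client):
    """Send PING; redis-py returns True when the server answers."""
    return client.ping()


# Usage, with a Redis server running locally:
# client = connect()
# print(check_connection(client))
```

If the server is unreachable, `ping()` raises a connection error rather than returning False, so production code would normally wrap the call in a try/except.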

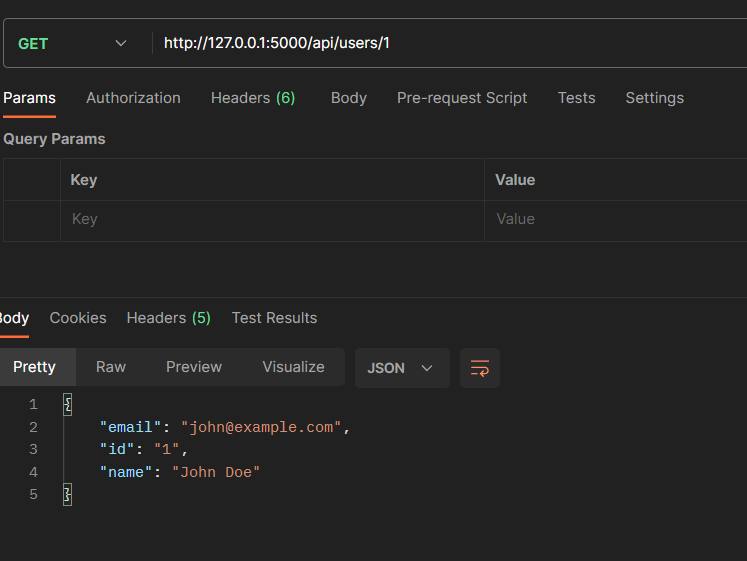

Step 2: Defining External Routes from the API

Next, we define the external routes from our API that will be responsible for fetching data. These routes can be endpoints that retrieve data from a database, external APIs, or any other data source. Here is an example of defining an API route to fetch user data:

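The route code is not reproduced here, so this is only a sketch of one way to fetch user data from an external route, using the standard library. The base URL `https://api.example.com`, the `/users/<id>` path, and the function name `fetch_user` are all hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration.
API_BASE_URL = "https://api.example.com"


def fetch_user(user_id, opener=urllib.request.urlopen):
    """Fetch one user's record from the external route and decode the JSON body.

    `opener` defaults to urllib's urlopen and can be swapped out in tests.
    """
    url = f"{API_BASE_URL}/users/{user_id}"
    with opener(url) as response:
        return json.loads(response.read().decode("utf-8"))
```

Passing the opener in as a parameter keeps the function easy to exercise without network access.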

Step 3: Establishing Caching with Redis

To implement caching, we can utilize Redis to store and retrieve data. The code snippet below demonstrates how to establish caching by checking if the requested data is available in the cache. If not, it fetches the data from the external route and stores it in Redis for future requests.

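The check-then-fetch flow described above is commonly called the cache-aside pattern. A minimal sketch follows, assuming a redis-py style client; the `user:<id>` key format, the 60-second TTL, and the name `get_user` are hypothetical, and `fetch_user` stands for any callable that reads from the external route.

```python
import json


def get_user(client, user_id, fetch_user, ttl_seconds=60):
    """Return (user, source), where source is "cache" or "api"."""
    key = f"user:{user_id}"  # hypothetical key naming scheme
    cached = client.get(key)
    if cached is not None:
        # Cache hit: the value was stored as a JSON string.
        return json.loads(cached), "cache"

    # Cache miss: fall back to the slower external route.
    user = fetch_user(user_id)
    # SETEX stores the value together with its time-to-live in seconds.
    client.setex(key, ttl_seconds, json.dumps(user))
    return user, "api"
```

The first call for a given user goes to the external route and fills the cache; later calls within the TTL are answered from Redis.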

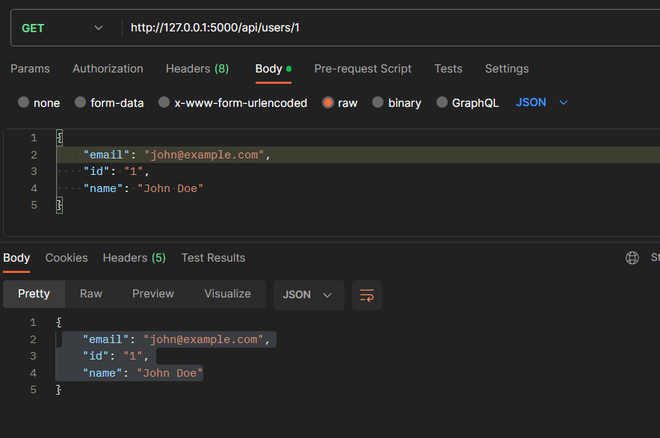

Step 4: Comparing Data from the Cache

To showcase the effectiveness of caching, we can compare the data retrieved from the cache with the data fetched from the external route. The following code snippet demonstrates how to compare the received data from the cache with data fetched from the external route:

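One way to sketch such a comparison is to read the same record through both paths and record whether the results match and how long each read took. The function name `compare_sources` and the shape of its return value are hypothetical; `read_cached` and `read_direct` stand for any two callables that take a user ID.

```python
import time


def compare_sources(read_cached, read_direct, user_id):
    """Read the same record both ways and report equality and timings."""
    start = time.perf_counter()
    cached = read_cached(user_id)
    cached_seconds = time.perf_counter() - start

    start = time.perf_counter()
    direct = read_direct(user_id)
    direct_seconds = time.perf_counter() - start

    return {
        "match": cached == direct,
        "cached_seconds": cached_seconds,
        "direct_seconds": direct_seconds,
    }
```

A single measurement is noisy, so averaging over several calls would give a fairer picture of the latency difference.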

By comparing the data retrieved from the cache with the data fetched from the external route, we can validate the caching mechanism. If the cached data matches the data fetched from the external route, the cache is serving the correct data. Timing the two reads then shows how much latency is saved by not going back to the slower data source.

Conclusion

Redis caching provides a powerful solution for optimizing application performance by storing frequently accessed data in memory. By leveraging Redis’s in-memory storage capabilities, applications can significantly reduce response times and database load. In this article, we explored the fundamentals of caching, introduced Redis as a caching solution, and demonstrated the step-by-step implementation of Redis caching using code snippets and examples.

Implementing Redis caching can lead to substantial performance improvements, especially for applications that rely on fetching data from databases or external APIs. By reducing the time required to retrieve data, applications can deliver faster responses, enhance user experiences, and scale more efficiently. Remember, effective caching strategies involve carefully determining which data to cache, setting appropriate expiration policies, and regularly monitoring cache performance to ensure optimal results. Redis caching, when utilized correctly, can be a game-changer in achieving high-performance applications.
