We've already explore Compute Engine–which is Google Cloud's infrastructure as a

service offering, with access to servers, file systems, and networking–and App

Engine, which is Google Cloud's platform as a service offering.

What are containers?

A container is an invisible box around your code and its dependencies with limited

access to its own partition of the file system and hardware. It only requires a few

system calls to create and it starts as quickly as a process. All that’s needed on each

host is an OS kernel that supports containers and a container runtime.

In essence, the OS and dependencies are being virtualized. A container gives you the

best of two worlds - it scales like Platform as a Service (PaaS) but gives you nearly

the same flexibility as Infrastructure as a Service (IaaS.) This makes your code ultra

portable, and the OS and hardware can be treated as a black box. So you can go from

development, to staging, to production, or from your laptop to the cloud, without

changing or rebuilding anything.

In short, containers are portable, loosely coupled boxes of application code and

dependencies that allow you to “code once, and run anywhere.”

You can scale by duplicating single containers.As an example, let’s say you want to launch and then scale a web server. With a

container, you can do this in seconds and deploy dozens or hundreds of them,

depending on the size or your workload, on a single host. This is because containers

can be easily “scaled” to meet demand.

This is just a simple example of scaling one container which is running your whole

application on a single host.

A product that helps manage and scale containerized applications is Kubernetes. So

to save time and effort when scaling applications and workloads, Kubernetes can be

bootstrapped using Google Kubernetes Engine or GKE.

So, what is Kubernetes? Kubernetes is an open-source platform for managing

containerized workloads and services. It makes it easy to orchestrate many

containers on many hosts, scale them as microservices, and easily deploy rollouts

and rollbacks.

At the highest level, Kubernetes is a set of APIs that you can use to deploy containers

on a set of nodes called a cluster.

The system is divided into a set of primary components that run as the control plane

and a set of nodes that run containers. In Kubernetes, a node represents a computing

instance, like a machine. Note that this is different to a node on Google Cloud which is

a virtual machine running in Compute Engine.

You can describe a set of applications and how they should interact with each other,

and Kubernetes determines how to make that happen.

Deploying containers on nodes by using a wrapper around one or more containers is

what defines a Pod. A Pod is the smallest unit in Kubernetes that you create or deploy.

It represents a running process on your cluster as either a component of your

application or an entire app.

Generally, you only have one container per pod, but if you have multiple containers

with a hard dependency, you can package them into a single pod and share

networking and storage resources between them. The Pod provides a unique network

IP and set of ports for your containers and configurable options that govern how your

containers should run.

One way to run a container in a Pod in Kubernetes is to use the kubectl run

command, which starts a Deployment with a container running inside a Pod.

A Deployment represents a group of replicas of the same Pod and keeps your Pods

running even when the nodes they run on fail. A Deployment could represent a

component of an application or even an entire app.

To see a list of the running Pods in your project, run the command:

$ kubectl get pods

Kubernetes creates a Service with a fixed IP address for your Pods, and a controller

says "I need to attach an external load balancer with a public IP address to that

Service so others outside the cluster can access it".

In GKE, the load balancer is created as a network load balancer.

Any client that reaches that IP address will be routed to a Pod behind the Service. A

Service is an abstraction which defines a logical set of Pods and a policy by which to

access them.

As Deployments create and destroy Pods, Pods will be assigned their own IP

addresses, but those addresses don't remain stable over time.

A Service group is a set of Pods and provides a stable endpoint (or fixed IP address)

for them.

For example, if you create two sets of Pods called frontend and backend and put

them behind their own Services, the backend Pods might change, but frontend Pods

are not aware of this. They simply refer to the backend Service.

You can still reach your endpoint as before by using

kubectl get services to get

the external IP of the Service and reach the public IP address from a client.

Benefits of running GKE clusters :

Running a GKE cluster comes with the benefit of advanced cluster management

features that Google Cloud provides. These include:

● Google Cloud's load-balancing for Compute Engine instances.

● Node pools to designate subsets of nodes within a cluster for additional

flexibility.

● Automatic scaling of your cluster's node instance count.

● Automatic upgrades for your cluster's node software.

● Node auto-repair to maintain node health and availability.

● Logging and monitoring with Google Cloud Observability for visibility into your

cluster

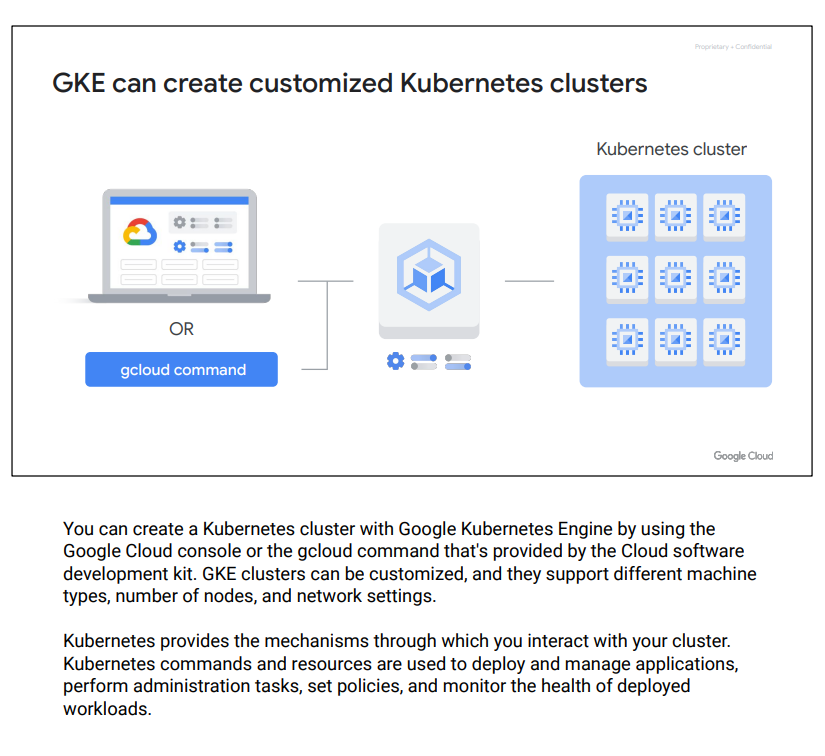

To start up Kubernetes on a cluster in GKE, all you do is run this command:

$> gcloud container clusters create k1

No comments:

Post a Comment