CI/CD: An introduction using Bitbucket Pipelines

Every production application needs an automated deployment strategy to ensure stability and minimal downtime. If you are not sure why continuous integration and continuous delivery/deployment (CI/CD) is a necessity and not a luxury, then this article is for you.

First, I will go over what CI/CD is and why you should add it to your tool belt.

Then, using Bitbucket Pipelines, a popular CI/CD service integrated into Bitbucket, I will explain how you can set up your own deployment pipelines for your personal projects.

What is CI/CD?

At its core, for cloud applications, CI/CD is an automated process, usually running on a server, that runs different levels of automated tests on your codebase before it is deployed to an environment (not necessarily production) or before your changes are merged into the master branch. It usually does much more than run tests, for example linting, WhiteSource dependency checks, translations and so on.

You can run any process you want when your code is pushed or merged to any branch. You can also run different processes depending on specific changes to specific files that are being pushed to specific branches. Hence, you can be as granular as you want.
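As an illustration of that granularity in Bitbucket Pipelines (covered later in this article), the sketch below combines a branch filter with a condition, so the step only runs on master and only when the pushed changes touch files under a frontend/ folder. The frontend/ folder and its lint script are hypothetical; adjust the paths and commands to your project.

```yaml
pipelines:
  branches:
    master:
      - step:
          name: Lint frontend
          image: node:16
          # Only runs when the changes being built touch files under frontend/
          condition:
            changesets:
              includePaths:
                - "frontend/**"
          script:
            - cd frontend        # hypothetical project layout
            - npm install
            - npm run lint       # assumes a "lint" script in frontend/package.json
```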

Now we can go back to why we need CI/CD.

Why use CI/CD?

Imagine you have a portfolio project that you want to showcase to potential clients, or an MVP for your next big startup.

It works perfectly on your machine.

You have even deployed it to platforms like Heroku or Vercel.

You have asked your friends to test it. Everything looks perfect.

But it needs to be deployed to production.

Production environments are different from staging and testing environments. Applications that are in production use production databases and services. This means that real users/customers/testers will be using your product and their data has to be persisted in a secure and reliable way.

There is no margin for error here. If a bug in production deletes or corrupts data, it can cause huge problems for you as a developer. Your clients or stakeholders assume that you have already implemented disaster recovery strategies as a fallback, but as a professional, it is imperative that you prevent such events in the first place.

One of the tools that developers use to avoid such mishaps is CI/CD.

Let’s go over the three methodologies behind it.

The continuous integration methodology (CI)

Continuous integration ensures that each change pushed to master or any other branch goes through a set of tests, making sure that the production build is not broken. This is especially helpful in projects where many developers push to the same master branch, and it is a vital process in every software development project.

The continuous delivery methodology (CD)

Continuous delivery makes sure that your codebase (the master branch) is always in a state that is ready for production. This is ensured by running tests and other processes before anything is merged to master. It allows your QA or operations teams to promote candidate environments built from the master branch to production.
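In Bitbucket Pipelines, one way to run these checks before a merge is the pull-requests section, which runs its steps for every pull request whose source branch matches the given pattern. A minimal sketch, assuming an npm project whose package.json defines a test script:

```yaml
pipelines:
  pull-requests:
    '**':   # every source branch that has an open pull request
      - step:
          name: Test pull request
          image: node:16
          script:
            - npm install
            - npm test
```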

The continuous deployment methodology (CD)

Continuous deployment ensures that changes are deployed to production continuously, so you receive feedback from users and can iron out bugs immediately. Small, frequent changes are pushed to production, and these are easier to manage than deploying a lot of code at once. This requires a lot of upfront investment and preparation, as things can go south rapidly if the automated tests are not written with enough foresight. It is by far the hardest of the three to implement.
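The practical difference between the last two methodologies can be a single line of configuration. In the Bitbucket Pipelines sketch below, the production step is marked with trigger: manual, so the pipeline stops after the tests and waits for someone to start the deployment from the Bitbucket UI (continuous delivery). Removing that line deploys every change on master automatically (continuous deployment). The deploy.sh script is hypothetical and stands in for your real deployment command.

```yaml
pipelines:
  branches:
    master:
      - step:
          name: Test
          image: node:16
          script:
            - npm install
            - npm test
      - step:
          name: Deploy to production
          deployment: production
          # Continuous delivery: a person starts this step from the Bitbucket UI.
          # Delete the next line to deploy automatically (continuous deployment).
          trigger: manual
          script:
            - ./deploy.sh   # hypothetical deployment script
```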

How to use CI/CD?

There are many services available to get started, such as CircleCI, GitHub Actions and Jenkins.

Since I use Bitbucket Pipelines at work, I’ll be using it in this article.

To follow along you will need your repo to be hosted in Bitbucket.

Using the Bitbucket Pipelines API and some additional setup, you can still use the examples if your repo is hosted on another platform such as GitHub or GitLab.

To use Bitbucket Pipelines, you need to define a configuration file called bitbucket-pipelines.yml at the root of your repository. This file is a set of instructions for the pipelines to follow.

Let’s look at a bitbucket-pipelines.yml file.

```yaml
definitions:
  images:
    - image: &my-node
        name: node:16
    - image: &my-node-old
        name: node:14
  steps:
    - step: &build
        name: build
        image: *my-node
        script:
          - npm install
          - npm run build
        artifacts:
          - dist/**
    - step: &test
        name: test
        image: *my-node-old
        caches:
          - node
        script:
          - npm install
          - npm test
        artifacts:
          download: false
    - step: &configure-promotion
        name: Configure promotion
        script:
          - echo export REGION='us' > deploy.env
        artifacts:
          - deploy.env
    - step: &promote
        name: Call promotion pipeline
        script:
          - if [ -f deploy.env ]; then source deploy.env; fi
          - pipe: atlassian/trigger-pipeline:5.0.0
            variables:
              BITBUCKET_USERNAME: $BITBUCKET_USERNAME
              BITBUCKET_APP_PASSWORD: $BITBUCKET_PASSWORD
              REPOSITORY: 'my-promoter-app'
              CUSTOM_PIPELINE_NAME: 'promote-pipeline'
              PIPELINE_VARIABLES: >
                [{
                  "key": "SOURCE_APP",
                  "value": "my-main-app"
                },
                {
                  "key": "REGION",
                  "value": "$REGION"
                },
                {
                  "key": "SOURCE_COMMIT",
                  "value": "$SOURCE_COMMIT"
                }]
              WAIT: "true"

pipelines:
  default:
    - step: *test
    - step: *build
  branches:
    master:
      - step: *test
      - step: *build
      - step: *configure-promotion
      - step: *promote
  custom:
    build-app:
      - step: *test
      - step: *build
    deploy-app:
      - variables:
          - name: REGION
          - name: SOURCE_COMMIT
      - step: *promote
    build-deploy-app:
      - variables:
          - name: REGION
      - step: *test
      - step: *build
      - step: *configure-promotion
      - step: *promote
```

If this seems like a lot, don’t fret. I purposely bloated the configuration file a bit to show the different cool things you can do.

While they do have their place, I personally do not like simplified ‘hello world’ examples as they are not always applicable to real situations. Hence, the large file.

Images

Images define the build environment where the pipelines will run. If you are familiar with Docker images, that is exactly what they are. An image is comparable to a snapshot in virtual machine (VM) environments.

Here I have defined two images with different node versions and named them my-node and my-node-old, so that different steps can use different versions of node later. Steps that don’t specify an image at all, such as the two promotion steps, run in Bitbucket’s default build image.

```yaml
images:
  - image: &my-node
      name: node:16
  - image: &my-node-old
      name: node:14
```

Pipelines

Pipelines are processes consisting of steps that can run in sequence or in parallel. To make your pipelines modular, you can define the steps in a separate section and point to them here using YAML aliases such as *test. In the following snippet, two steps, test and build, are part of the default process. I will go over their implementation later.

```yaml
pipelines:
  default:
    - step: *test
    - step: *build
```

The default process runs every time a developer pushes a commit to a branch that has no branch-specific pipeline of its own, following the continuous integration (CI) methodology. Every push triggers the automated tests, which helps ensure your work stays stable once the feature you developed is merged to master.
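The default process above runs its steps one after another. When steps don’t depend on each other, a parallel block lets them run at the same time. A sketch, assuming a hypothetical lint script in your package.json alongside test and build:

```yaml
pipelines:
  default:
    - parallel:
        # These two steps start at the same time, each in its own container
        - step:
            name: test
            image: node:16
            script:
              - npm install
              - npm test
        - step:
            name: lint
            image: node:16
            script:
              - npm install
              - npm run lint   # assumes a "lint" script exists
    # This step starts only after both parallel steps succeed
    - step:
        name: build
        image: node:16
        script:
          - npm install
          - npm run build
```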

Branches

If you want a process to run when commits are pushed or merged to a specific branch, you can set it up like this:

```yaml
branches:
  master:
    - step: *test
    - step: *build
    - step: *configure-promotion
    - step: *promote
```

This section is commonly used to deploy your code to a staging/test environment whenever a pull request is merged into the master branch. This plays well into the continuous deployment (CD) concept, where small and frequent deployments are the name of the game.

In the example above, we promote the app to some staging/test environment after running our automated tests. This way the QA team will always be testing the latest master branch.

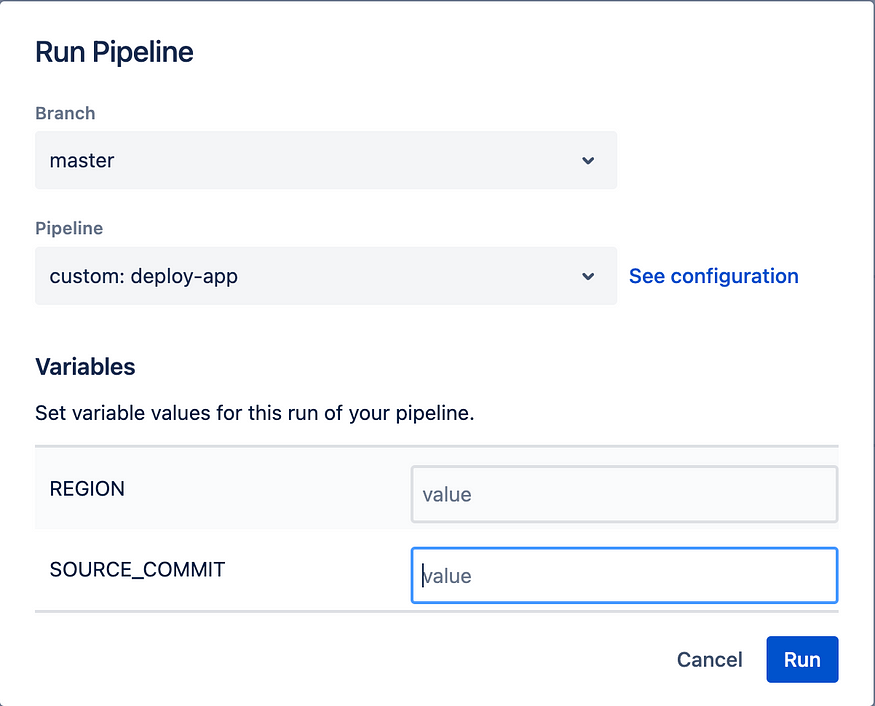
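Branch names in this section can also be glob patterns, which is handy when your team follows a naming convention. A sketch, assuming hypothetical release/ branches that should be deployed to a staging environment by an equally hypothetical deploy.sh script:

```yaml
pipelines:
  branches:
    master:
      - step:
          name: test
          image: node:16
          script:
            - npm install
            - npm test
    'release/*':    # matches release/1.0, release/2.3 and so on
      - step:
          name: Deploy release branch to staging
          deployment: staging
          script:
            - ./deploy.sh staging   # hypothetical deployment script
```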

Custom pipelines

There are many times when your QA, operations and content teams want to try a particular version of the app that corresponds to a particular commit. Or they may want to test a service that is only available in a certain region. As a developer, you can enable them to run custom pipelines. These can be run straight from the Bitbucket UI, which makes them easy to use.

Custom pipelines can also be triggered programmatically by other pipelines and by using the Bitbucket API. We will see this later.

Below are three such custom pipelines that are often used:

```yaml
custom:
  build-app:
    - step: *test
    - step: *build
  deploy-app:
    - variables:
        - name: REGION
        - name: SOURCE_COMMIT
    - step: *promote
  build-deploy-app:
    - variables:
        - name: REGION
    - step: *test
    - step: *build
    - step: *configure-promotion
    - step: *promote
```

Notice that the deploy-app and build-deploy-app processes declare variables, namely REGION and SOURCE_COMMIT, which have to be set before starting the respective pipelines. You can set them manually in the Bitbucket UI when you run the pipeline.
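Custom pipeline variables can also be given a default value and a list of allowed values, so people running the pipeline pick a value from a list instead of typing it, which protects against typos. A sketch of a standalone version of deploy-app; the region codes are hypothetical, and the echo step is only a placeholder for the real promote step:

```yaml
pipelines:
  custom:
    deploy-app:
      - variables:
          - name: REGION
            default: "us"
            allowed-values:       # offered as choices when running the pipeline
              - "us"
              - "eu"
          - name: SOURCE_COMMIT
      - step:
          name: Placeholder for the promote step
          script:
            - echo "Would promote $SOURCE_COMMIT in $REGION"
```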

Steps

Steps, as hinted before, are implementations of stages in a process. It is here where you’ll get into the nitty-gritty and execute scripts.

```yaml
steps:
  - step: &build
      name: build
      image: *my-node
      script:
        - npm install
        - npm run build
      artifacts:
        - dist/**
```

In the build step above, we use the my-node image, which corresponds to node:16. Next comes the script section with the actual commands: npm install installs the dependencies, and npm run build runs the build script defined in your package.json (a bare npm build does not run your build script).

You can run multiple commands in sequence by listing them in the script section. Below you’ll find another step, test, which also runs two commands: it installs dependencies and then runs the automated tests.

```yaml
- step: &test
    name: test
    image: *my-node-old
    caches:
      - node
    script:
      - npm install
      - npm test
    artifacts:
      download: false
```

Note that here I use the my-node-old image because my hypothetical test cases only run on the older node:14 version.

I have also configured the step to use Bitbucket’s predefined node cache, which stores node_modules. After the first run, where npm install downloads all dependencies, the cached node_modules is restored first, so npm install has little left to do. Since dependencies generally do not change often, this speeds up the pipelines.
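An alternative some teams use is to cache npm’s own download cache (~/.npm) instead of node_modules and install with npm ci, which always installs exactly the versions in package-lock.json. A sketch, assuming your repository commits a package-lock.json; npm is a custom cache name chosen for this example, not one of the predefined caches:

```yaml
definitions:
  caches:
    npm: ~/.npm    # custom cache: npm's download cache in the build container
  steps:
    - step: &test
        name: test
        image: node:14
        caches:
          - npm
        script:
          - npm ci       # clean install from package-lock.json, reusing cached downloads
          - npm test
```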

You may also have noticed the artifacts section where I am telling the pipeline to not download any artifacts. I will explain what they are soon.

In the next step, we configure the promotion system. In a real project, this step is usually much more verbose, with a lot of environment variables and other configuration scripts. Here, however, I just set one REGION variable to us, simulating a real-life scenario where your app is deployed in different regions.

I write this to a file called deploy.env, which is created in the working directory of the container running the step. Each step runs in its own fresh container, so you can think of each step as having its own isolated file system: any file that you create or edit is lost when the process moves on to the next step.

This is where the artifacts section comes in.

If you define the file in the artifacts section, this file will be persisted for all subsequent steps.

```yaml
- step: &configure-promotion
    name: Configure promotion
    script:
      - echo export REGION='us' > deploy.env
    artifacts:
      - deploy.env
```

If you change a file and want the change to be available in the subsequent steps of a process, make sure to include it in the artifacts section.

The next snippet is also a step, but this time I’ll dig into something called pipes.

Pipes

```yaml
- step: &promote
    name: Call promotion pipeline
    script:
      - if [ -f deploy.env ]; then source deploy.env; fi
      - pipe: atlassian/trigger-pipeline:5.0.0
        variables:
          BITBUCKET_USERNAME: $BITBUCKET_USERNAME
          BITBUCKET_APP_PASSWORD: $BITBUCKET_PASSWORD
          REPOSITORY: 'my-promoter-app'
          CUSTOM_PIPELINE_NAME: 'promote-pipeline'
          PIPELINE_VARIABLES: >
            [{
              "key": "SOURCE_APP",
              "value": "my-main-app"
            },
            {
              "key": "REGION",
              "value": "$REGION"
            },
            {
              "key": "SOURCE_COMMIT",
              "value": "$SOURCE_COMMIT"
            }]
          WAIT: "true"
```

Note that "$REGION" is wrapped in quotes, since each value in PIPELINE_VARIABLES must be a JSON string.

First, let’s look at the first part of the script section. It is a simple bash command that checks whether a file called deploy.env exists. If it does, it sources the file, running its commands in the current shell so that the exported variables are available to the rest of the step.

This means that if a preceding step has deploy.env as an artifact, REGION will be set to us. Otherwise, REGION will be undefined unless it is set as a variable in a custom pipeline or explicitly defined in the script section.
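One side effect of this design: in build-deploy-app, configure-promotion overwrites the REGION you enter with us, and on master SOURCE_COMMIT is never set, so the promoter receives an empty value for it. One possible variant of the configure-promotion step, sketched below, keeps a REGION supplied by a custom pipeline, falls back to us otherwise, and falls back to Bitbucket’s built-in BITBUCKET_COMMIT variable for SOURCE_COMMIT:

```yaml
- step: &configure-promotion
    name: Configure promotion
    script:
      # Keep REGION if a custom pipeline supplied it, otherwise default to us
      - echo "export REGION=${REGION:-us}" > deploy.env
      # BITBUCKET_COMMIT is the commit this pipeline is building
      - echo "export SOURCE_COMMIT=${SOURCE_COMMIT:-$BITBUCKET_COMMIT}" >> deploy.env
    artifacts:
      - deploy.env
```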

Next, you will see a pipe called atlassian/trigger-pipeline:5.0.0.

This is an official pipe maintained by Atlassian that takes a few variables and triggers another pipeline programmatically. This is useful when you have repos dedicated to a particular job, such as promoting apps to different stages and environments.

In the snippet above, my-promoter-app is a repo with a custom pipeline called promote-pipeline, which takes the source app my-main-app and promotes its $SOURCE_COMMIT in $REGION.

This practice of having separate promoter applications is common in real-world software projects.
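To make the receiving side concrete, here is a sketch of what the bitbucket-pipelines.yml in my-promoter-app might contain. Only the pipeline name and the three variable names come from the example above; promote.sh is a hypothetical script standing in for whatever your promotion actually involves:

```yaml
pipelines:
  custom:
    promote-pipeline:
      # Variables sent by the trigger-pipeline pipe (also editable when run from the UI)
      - variables:
          - name: SOURCE_APP
          - name: REGION
          - name: SOURCE_COMMIT
      - step:
          name: Promote
          script:
            - echo "Promoting $SOURCE_APP at commit $SOURCE_COMMIT to $REGION"
            - ./promote.sh "$SOURCE_APP" "$SOURCE_COMMIT" "$REGION"   # hypothetical script
```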

There is also a WAIT variable set to true. This makes the promote step wait until promote-pipeline finishes, and if that pipeline fails for some reason, the promote step fails as well. You can read more about the trigger-pipeline pipe and its variables in its documentation.

Also note that $BITBUCKET_USERNAME and $BITBUCKET_PASSWORD are variables that you have to create and set yourself, for example as secured repository variables in your repository settings. The Bitbucket docs explain how to set them.

Closing remarks

There is a lot more to learn about Bitbucket pipelines and what you can do with it. Do check out their docs for more information.

While they have terrific documentation, I wanted to share with you the big picture of why we use CI/CD in the first place. Using a true-to-life example, I wanted to show you how such tools can help us release stable and reliable apps.

This was one of the many questions I had back when I was getting started.

One last thing…

Whenever a pipeline fails, there might be something wrong with the automated tests themselves, or a breaking change was just merged that requires the pipelines and tests to be updated.

But sometimes, a pipeline fails and catches a bug that crept its way through, despite code reviews and rigorous developer testing.

Sometimes it prevents downtime, headaches and potentially catastrophic scenarios.

It’s at times like these that you will appreciate your pipelines and thank yourself for taking the time to implement them.

I hope this helped. Cheers!
