What is Rolling Deployment?

A rolling deployment gradually replaces an older version of an application with a new one. This software deployment strategy replaces the infrastructure running the application piece by piece until the new version is the only version running. It is the default deployment strategy in Kubernetes.

How Rolling Deployment Works

Traditionally, to update software running on a server, the application server was taken offline, software was upgraded, tested in a closed environment, and then restored into service. This resulted in a significant amount of downtime, which was costly and disruptive for businesses. To make things worse, if there was a need to revert the installation to a previous version due to an unexpected bug or issue, the same process was repeated, resulting in more downtime.

In a rolling upgrade, only a portion of the application server’s capacity is taken offline at any given time, meaning the update can be performed with no downtime.

Rolling deployments use the concept of a window size—this is the number of servers that are updated at any given time. For example, if a Kubernetes cluster is running 10 instances of an application (10 pods), and you want to update two of them at a time, you can perform a rolling deployment with a window size of 2.

Each application instance in the deployment window is updated, basic testing is performed, and Kubernetes validates that the application is working properly. The instances that were successfully updated resume service, and then the next instances are taken offline and updated. The process repeats until all relevant pods in the cluster run the new version of the application.

This process ensures there are always instances of the application online and ready to serve user requests throughout the deployment process.
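
The process above can be sketched as a small simulation. This is a simplified model under stated assumptions, not how Kubernetes implements rollouts: the function name rolling_update and the list of version strings are illustrative, and the health check step is only marked by a comment.

```python
def rolling_update(instances, window_size, new_version):
    """Update instances in place, window_size at a time.

    Returns the smallest number of instances that stayed online at any step.
    """
    total = len(instances)
    min_online = total
    for start in range(0, total, window_size):
        batch = range(start, min(start + window_size, total))
        # Instances in the current window are offline while they are updated.
        min_online = min(min_online, total - len(batch))
        for i in batch:
            instances[i] = new_version
        # A real rollout would run health checks here before moving on.
    return min_online


pods = ["v1"] * 10
lowest = rolling_update(pods, window_size=2, new_version="v2")
print(pods.count("v2"), lowest)  # 10 8
```

With 10 instances and a window size of 2, the update takes five rounds and at least 8 instances remain online throughout.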

Defining a Rolling Deployment in Kubernetes

Let’s discuss a rolling deployment in Kubernetes terms. Rolling deployment is the default deployment strategy in Kubernetes. It lets you update a set of pods with no downtime, by incrementally replacing pod instances with new instances that run a new version of the application. The new pods are scheduled on eligible nodes (they may not run on the same nodes as the original pods).

To perform a rolling update, you first need to define a Deployment object in your Kubernetes cluster, specifying which pods participate in the deployment and the current version of the application.

As a simple example, consider a Deployment object that runs 10 instances of an nginx container, using the container image nginx:1.14.2, with the RollingUpdate strategy.
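
As a rough sketch of such a Deployment object, the following Python builds the manifest as a dictionary and prints it as JSON, a format that kubectl apply -f accepts alongside YAML. The replica count, image, and strategy type come from the description above; the object name nginx-deployment, the app: nginx label, and container port 80 are illustrative assumptions.

```python
import json


def nginx_deployment(replicas=10, image="nginx:1.14.2"):
    """Build a Deployment manifest that uses the RollingUpdate strategy."""
    labels = {"app": "nginx"}  # assumed label, used to select the pods
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # RollingUpdate is also what Kubernetes uses when no strategy is set.
            "strategy": {"type": "RollingUpdate"},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "nginx",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


manifest = nginx_deployment()
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it with kubectl apply -f is one way to create the object in a cluster.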

You can set two optional parameters that define how the rolling update takes place:

- maxSurge: specifies the maximum number of pods that a deployment can create above the desired number of pods during the update. This is roughly equivalent to the deployment window. You can specify maxSurge as an integer or as a percentage of the desired total number of pods. If it is not set, the default is 25%.

- maxUnavailable: specifies the maximum number of pods that can be unavailable during the rollout. It can be defined either as an absolute number or as a percentage of the desired number of pods. If it is not set, the default is also 25%.

At least one of these parameters must be larger than zero.
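
To make the effect of these two parameters concrete, here is a small calculation of the pod-count bounds they imply. It assumes the rounding rule described in the Kubernetes documentation (a percentage for maxSurge is rounded up, a percentage for maxUnavailable is rounded down); the function names resolve and rollout_bounds are hypothetical helpers, not part of any Kubernetes API.

```python
def resolve(value, replicas, round_up):
    """Turn an int or a percentage string such as "25%" into a pod count."""
    if isinstance(value, int):
        return value
    scaled = int(value.rstrip("%")) * replicas
    # Integer arithmetic avoids floating-point surprises when rounding.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the pod-count limits that hold during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


print(rollout_bounds(10))  # {'max_total_pods': 13, 'min_available_pods': 8}
```

With 10 replicas and the default 25% for both parameters, the rollout may run up to 13 pods in total and keeps at least 8 available.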

Once you apply the Deployment object in the cluster, you can trigger a rolling update by updating the pod image, for example with the kubectl set image command.
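
One way to update the image is the kubectl set image command, followed by kubectl rollout status to watch progress. The sketch below only assembles those command lines and prints them. The Deployment name nginx-deployment, the container name nginx, and the target image nginx:1.16.1 are assumptions chosen for illustration.

```python
import shlex


def set_image_command(deployment, container, image):
    """Arguments for changing a container image, which starts a rolling update."""
    return ["kubectl", "set", "image", f"deployment/{deployment}", f"{container}={image}"]


def rollout_status_command(deployment):
    """Arguments for following the rollout until it completes or fails."""
    return ["kubectl", "rollout", "status", f"deployment/{deployment}"]


update = set_image_command("nginx-deployment", "nginx", "nginx:1.16.1")
status = rollout_status_command("nginx-deployment")
print(shlex.join(update))
print(shlex.join(status))
```

On a machine with cluster access, each argument list could be passed to subprocess.run(..., check=True) to execute it.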

Rolling Deployment vs. Blue/Green Deployment

In a blue/green deployment, you deploy two versions of the same application in parallel: the current production version, called the “blue” version, and a new version, called the “green” version. At any given time users see only one of these versions:

- Initially users see the original “blue” version, then traffic is switched over to “green”.

- If there are any issues, it is easy to switch the traffic back to “blue”.

- Otherwise, “green” becomes the new “blue” version.

Here are the key differences between a blue/green and a rolling deployment:

- In a blue/green deployment, traffic is switched over instantly to the new version, while in a rolling deployment this can take time.

- In a blue/green deployment, rollback is easy and instantaneous, while in a rolling deployment a rollback has to be rolled out gradually as well, which takes time and can be difficult.

- In a blue/green deployment, you need to run both versions simultaneously, which can require as much as twice the computing resources of a rolling deployment. A rolling deployment conserves resources by gradually replacing the original with the new version.

- In a blue/green deployment, users are never served by different versions of the application at the same time, which is beneficial for legacy applications.

Rolling Deployment vs. Canary Deployment

Like a rolling deployment, a canary deployment makes a new release available to some users before others. However, while rolling deployments target certain servers, a canary strategy targets certain users, providing them with access to the new application version.

Here are key differences between a canary deployment and a rolling deployment:

- Canary deployments provide access to early feedback from users.

- Users participating in canary testing find issues and help improve the update before rolling it out to all users.

- With canaries you have full control over who gets the new version, while this is not the case with rolling deployments.

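- With canaries you have full control over the percentage of people who see the deployment, and you can hold the deployment at that percentage for as long as needed. A Kubernetes rolling update can be paused with kubectl rollout pause, but it does not let you choose what share of users sees the new version.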

- Canaries require a smart traffic switching method, while rolling updates need only a simple load balancer.

A canary is ideal for applications with a specific group of users more tolerant of bugs or better suited to identify them than the general user base. For example, you can deploy a new release internally to employees to test user acceptance before it goes public.
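
To illustrate what targeting users rather than servers can look like, here is a minimal sketch of a routing decision. It is an assumption-laden example, not the mechanism of any particular traffic manager: the function sees_canary, the hash-based bucketing, and the example.com addresses are all hypothetical.

```python
import hashlib


def sees_canary(user_id, percent, internal_users=frozenset()):
    """Decide whether a user is routed to the canary version."""
    # A chosen group, such as employees, always gets the new version.
    if user_id in internal_users:
        return True
    # Hashing the user ID gives each user a stable bucket from 0 to 99,
    # so the same user gets the same answer on every request.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


employees = frozenset({"alice@example.com"})
print(sees_canary("alice@example.com", percent=0, internal_users=employees))  # True
print(sees_canary("bob@example.com", percent=100))  # True
print(sees_canary("bob@example.com", percent=0))  # False
```

Raising percent step by step widens the audience, and setting it back to 0 removes the canary for everyone outside the internal group.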

When to Use a Rolling Deployment?

Rolling deployments are suitable for applications that run on multiple server instances, in such a way that some servers can be taken offline without significant performance degradation. They are also useful for deployments with minor changes, or when there is a high likelihood that pods will update successfully and quickly.

However, rolling deployments can be problematic when:

- The new version has changes that can break the user’s experience or previous transactions.

- During the deployment, some users will have a different experience than others, which can create challenges for technical support and other teams interfacing with the application.

- Deployments are risky and there is a need to roll back. Rollback to the previous working version can be complex in a rolling deployment pattern.

- There is a need to roll out the new version quickly—rolling updates can take time and the total deployment time is sometimes unpredictable.

Kubernetes Progressive Deployment with Codefresh

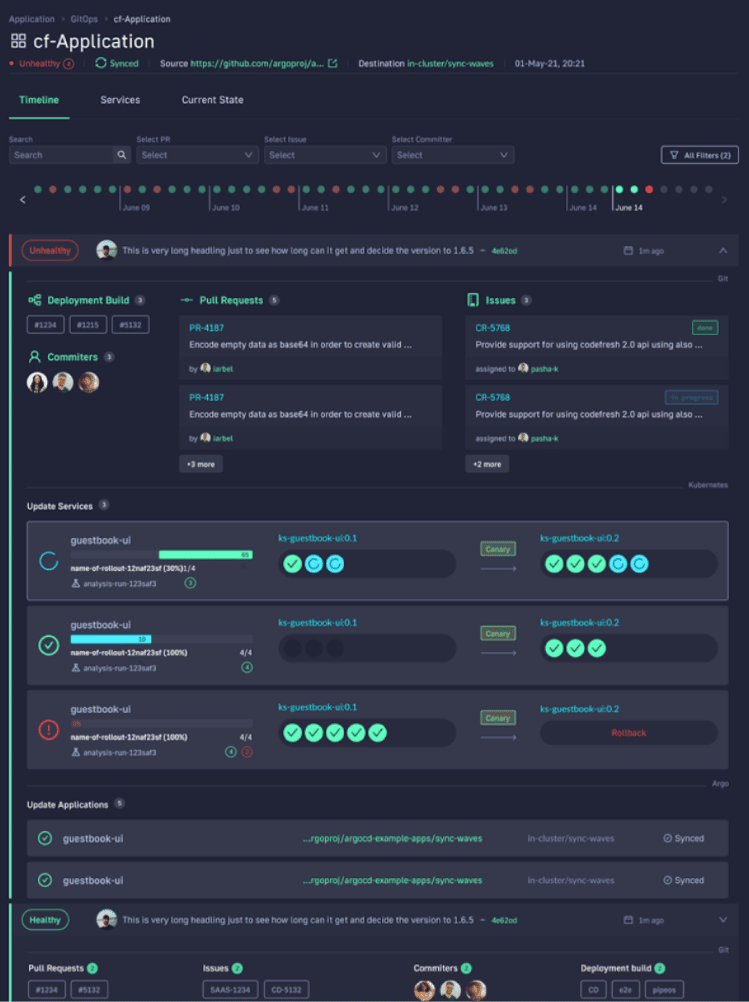

Codefresh lets you answer many important questions within your organization, whether you’re a developer or a product manager. For example:

- What features are deployed right now in any of your environments?

- What features are waiting in Staging?

- What features were deployed last Thursday?

- Where is feature #53.6 in our environment chain?

What’s great is that you can answer all of these questions by viewing one single dashboard. Our applications dashboard shows:

- Services affected by each deployment

- The current state of Kubernetes components

- Deployment history, with a log of who deployed what and when, and the pull request or Jira ticket associated with each deployment

This not only lets your developers view and better understand your deployments, but also lets the business answer important questions within the organization. For example, if you are a product manager, you can see whether a new feature has been deployed, when, and who deployed it.
